How to import Kaggle datasets directly into Google Colab?

Kaggle is a popular online platform for data science and machine learning competitions, datasets, and tutorials. You can find high-quality data on Kaggle to practice data analysis. I have also uploaded some of my own data to Kaggle to share with others.

Recently, I’ve begun learning machine learning, and one of the most popular datasets for beginners is the Titanic dataset. On the page below, you can download the Titanic passenger survival data and practice machine learning with it.

https://www.kaggle.com/competitions/titanic/overview

This raises a question: do I have to download every dataset from Kaggle manually, one by one?

Today, I’ll introduce how to import Kaggle datasets directly into Google Colab.

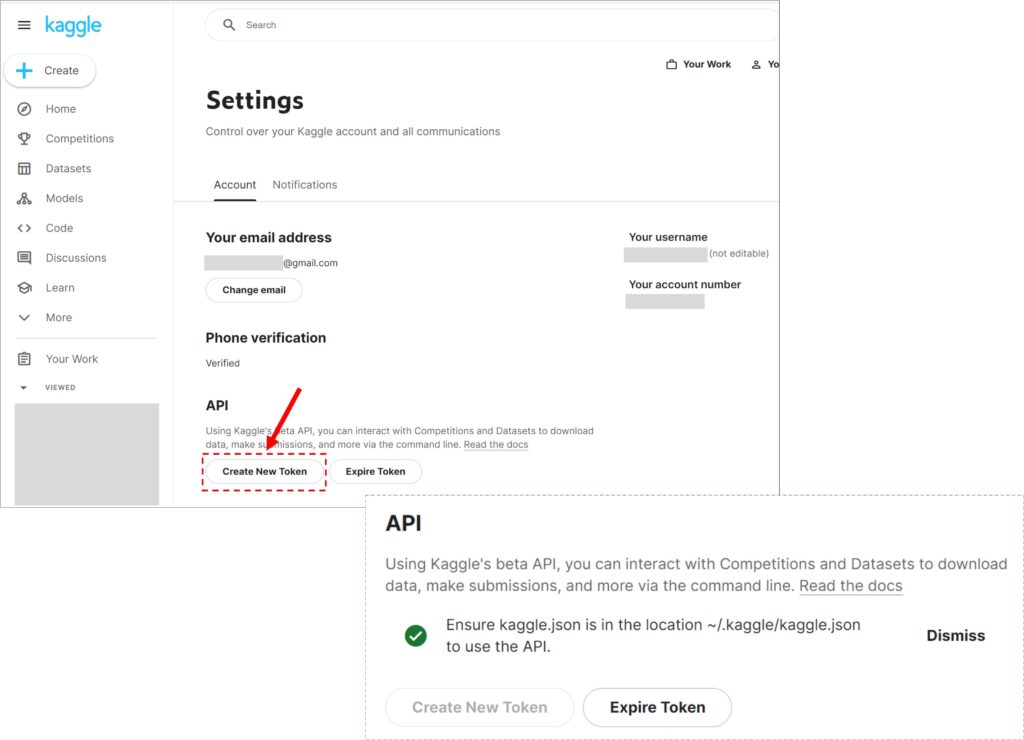

1) Create an API token

First, you need a Kaggle account. After logging in, go to Settings and click Create New Token. A JSON file named kaggle.json is then downloaded automatically.
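The downloaded kaggle.json is a small JSON object holding your Kaggle username and API key under the fields "username" and "key". As an optional sanity check before copying the file anywhere, a minimal sketch like the one below can confirm that both fields are present. The function name `check_kaggle_token` is hypothetical and not part of the Kaggle API.

```python
import json
from pathlib import Path


def check_kaggle_token(path):
    """Return the Kaggle username stored in a kaggle.json file.

    Hypothetical helper: raises ValueError if the file lacks the
    "username" or "key" fields that the Kaggle CLI reads.
    """
    data = json.loads(Path(path).read_text())
    missing = [field for field in ("username", "key") if not data.get(field)]
    if missing:
        raise ValueError(f"kaggle.json is missing: {', '.join(missing)}")
    # Return only the username so the API key is never printed
    return data["username"]


# Example, using the Drive location from this post:
# print(check_kaggle_token("/content/drive/MyDrive/Colab/0_archive/kaggle.json"))
```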

2) Set up the Kaggle API in Google Colab

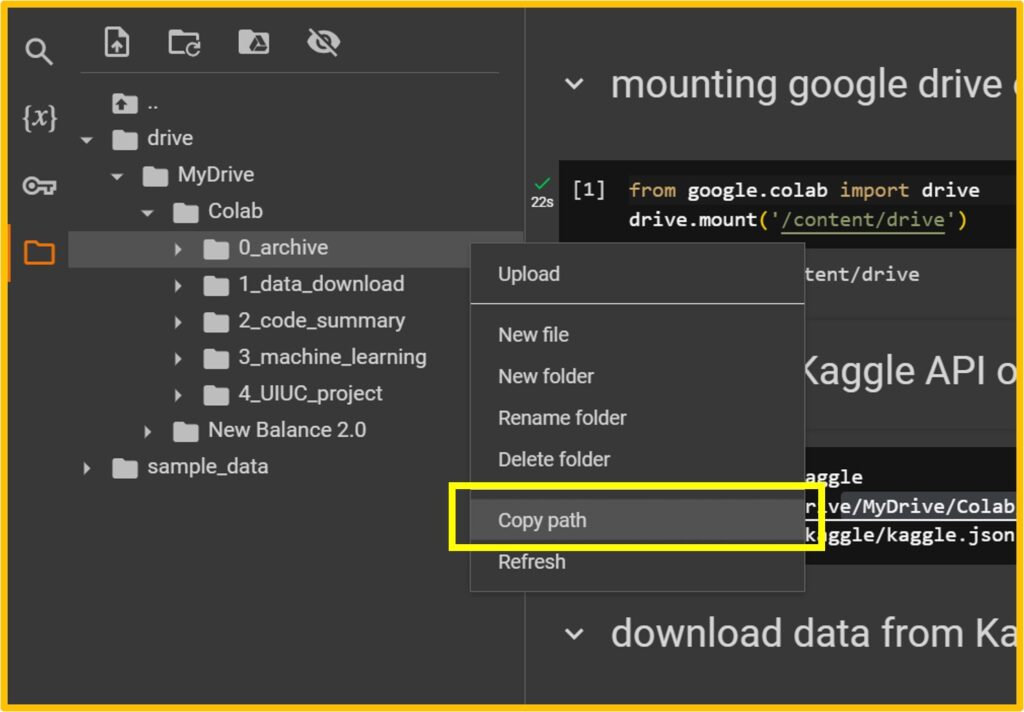

I saved kaggle.json in my Google Drive, in the folder /content/drive/MyDrive/Colab/0_archive.

Then, run the following code. It creates the ~/.kaggle folder, where the Kaggle API looks for its token, copies kaggle.json into it, and restricts the file's permissions so that only you can read it.

```python
! mkdir -p ~/.kaggle
! cp /content/drive/MyDrive/Colab/0_archive/kaggle.json ~/.kaggle
! chmod 600 ~/.kaggle/kaggle.json
```

For this to work, you must first mount Google Drive in Google Colab. Please refer to the post below if you want to learn how to do that.

□ How to use Google Colab for Python (power tool to analyze data)?
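If you prefer to stay in Python instead of using shell commands, the same three steps (mkdir -p, cp, chmod 600) can be sketched with the standard `os`, `shutil`, and `stat` modules. The helper name `install_kaggle_token` is hypothetical; the source path in the usage comment is the Drive location used in this post.

```python
import os
import shutil
import stat
from pathlib import Path


def install_kaggle_token(src, kaggle_dir="~/.kaggle"):
    """Copy kaggle.json into kaggle_dir and restrict it to mode 600.

    Hypothetical helper mirroring: mkdir -p, cp, chmod 600.
    """
    src = Path(src)
    if not src.is_file():
        # Usually means Google Drive has not been mounted yet
        raise FileNotFoundError(f"{src} not found; is Google Drive mounted?")
    target_dir = Path(kaggle_dir).expanduser()
    target_dir.mkdir(parents=True, exist_ok=True)    # mkdir -p
    target = target_dir / "kaggle.json"
    shutil.copyfile(src, target)                      # cp
    os.chmod(target, stat.S_IRUSR | stat.S_IWUSR)     # chmod 600
    return target


# In Colab, after mounting Drive:
# install_kaggle_token("/content/drive/MyDrive/Colab/0_archive/kaggle.json")
```

Mode 600 matters because the Kaggle CLI prints a warning when the key file is readable by other users.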

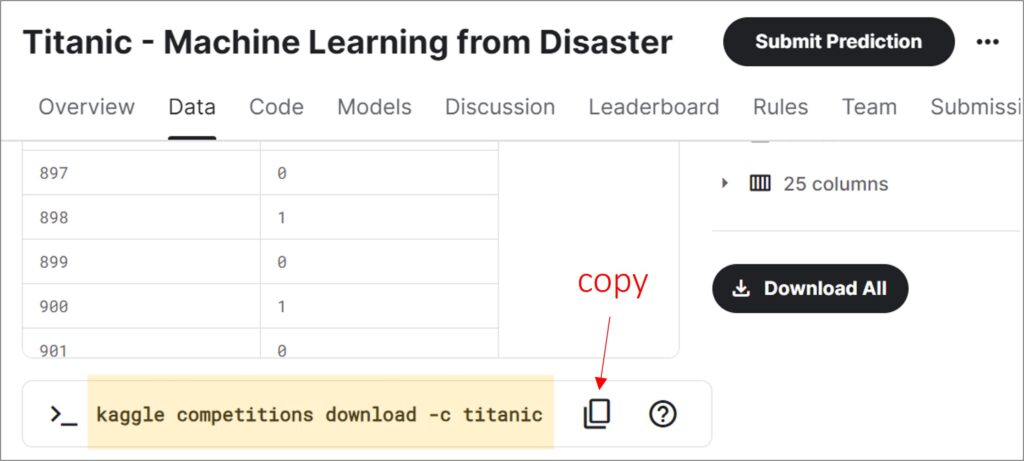

3) Copy the API command to import data from Kaggle

On the Titanic competition page on Kaggle, open the Data tab and scroll down; you will find the Kaggle API command for downloading the data.

In Google Colab, run the following code:

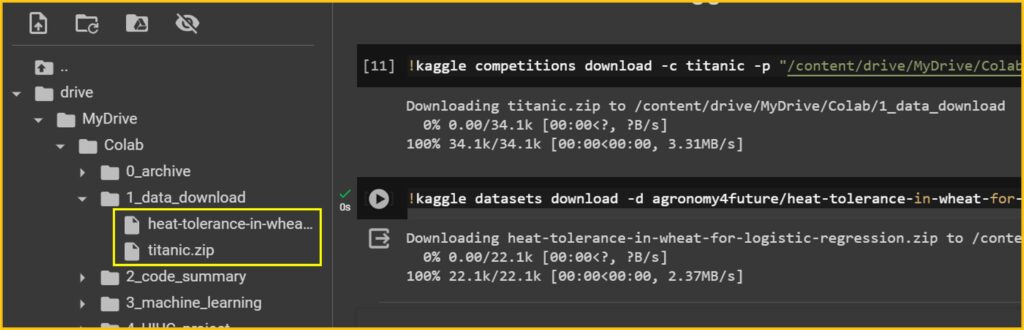

```python
!kaggle competitions download -c titanic -p "/content/drive/MyDrive/Colab/1_data_download"
```

You could simply write !kaggle competitions download -c titanic, which saves the file in the current working directory, but I want to download the data to a specific folder (/content/drive/MyDrive/Colab/1_data_download), so I added -p "/content/drive/MyDrive/Colab/1_data_download". Remember to put ! in front of the command: in Colab, ! runs the line as a shell command, which is how the Kaggle API is called here.

Then, the data will be downloaded to the file path you set up.
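If you would rather call the Kaggle CLI from ordinary Python code (for example, inside a loop over several competitions), one option is a small `subprocess` wrapper like the sketch below. It assumes the `kaggle` command is on the PATH, as it is whenever the `!kaggle` command above works; `download_competition` and its `dry_run` switch are hypothetical names for this illustration.

```python
import subprocess


def download_competition(name, dest, dry_run=False):
    """Download a Kaggle competition's files into dest via the Kaggle CLI.

    Hypothetical wrapper around: kaggle competitions download -c NAME -p DEST
    With dry_run=True it only returns the argument list without running it.
    """
    cmd = ["kaggle", "competitions", "download", "-c", name, "-p", dest]
    if not dry_run:
        # check=True raises CalledProcessError if the CLI exits with an error
        subprocess.run(cmd, check=True)
    return cmd


# Equivalent to the ! command above:
# download_competition("titanic", "/content/drive/MyDrive/Colab/1_data_download")
```

Because each argument is a separate list item, a folder name containing spaces would not need extra quotes. Also note that Kaggle generally requires you to accept a competition's rules on its website before the CLI can download that competition's data.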

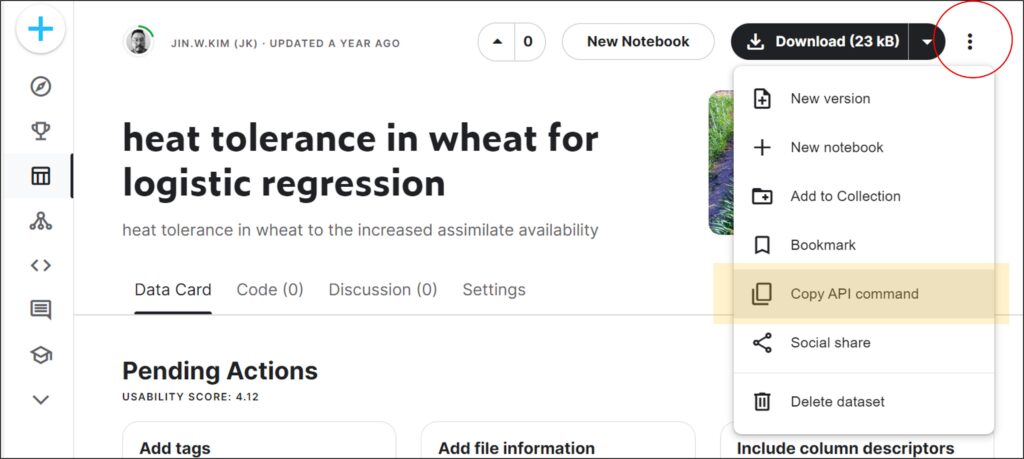

Sometimes there is no Data tab. For example, on the page of a dataset I uploaded to Kaggle, I can’t find the Kaggle API command.

In this case, click the three-dot icon ⋮ in the upper-right corner and then click “Copy API command”. This copies the API command for the dataset:

```bash
kaggle datasets download -d agronomy4future/heat-tolerance-in-wheat-for-logistic-regression
```

Then, add ! in front and the -p option as before, and run the following code:

```python
!kaggle datasets download -d agronomy4future/heat-tolerance-in-wheat-for-logistic-regression -p "/content/drive/MyDrive/Colab/1_data_download"
```

The data will be downloaded to the folder you specified.
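In both examples in this post, the zip file is named after the last part of the identifier: titanic gives titanic.zip, and agronomy4future/heat-tolerance-in-wheat-for-logistic-regression gives heat-tolerance-in-wheat-for-logistic-regression.zip. Assuming that pattern holds, a small sketch can take the command copied from Kaggle and predict where the zip will land, which saves copying the path by hand later. `expected_zip_path` is a hypothetical helper, and the naming rule is an observation from these two downloads, not a documented guarantee.

```python
import posixpath
import shlex


def expected_zip_path(api_command, dest):
    """Guess where the zip from a copied Kaggle API command will be saved.

    Assumption: the zip is named after the part of the -c/-d identifier
    after the last "/", as observed for the two downloads in this post.
    """
    args = shlex.split(api_command.lstrip("!"))
    for flag in ("-c", "-d"):
        if flag in args:
            ident = args[args.index(flag) + 1]
            name = ident.rsplit("/", 1)[-1]
            return posixpath.join(dest, name + ".zip")
    raise ValueError("no -c or -d identifier found in command")


# expected_zip_path("kaggle competitions download -c titanic",
#                   "/content/drive/MyDrive/Colab/1_data_download")
# returns '/content/drive/MyDrive/Colab/1_data_download/titanic.zip'
```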

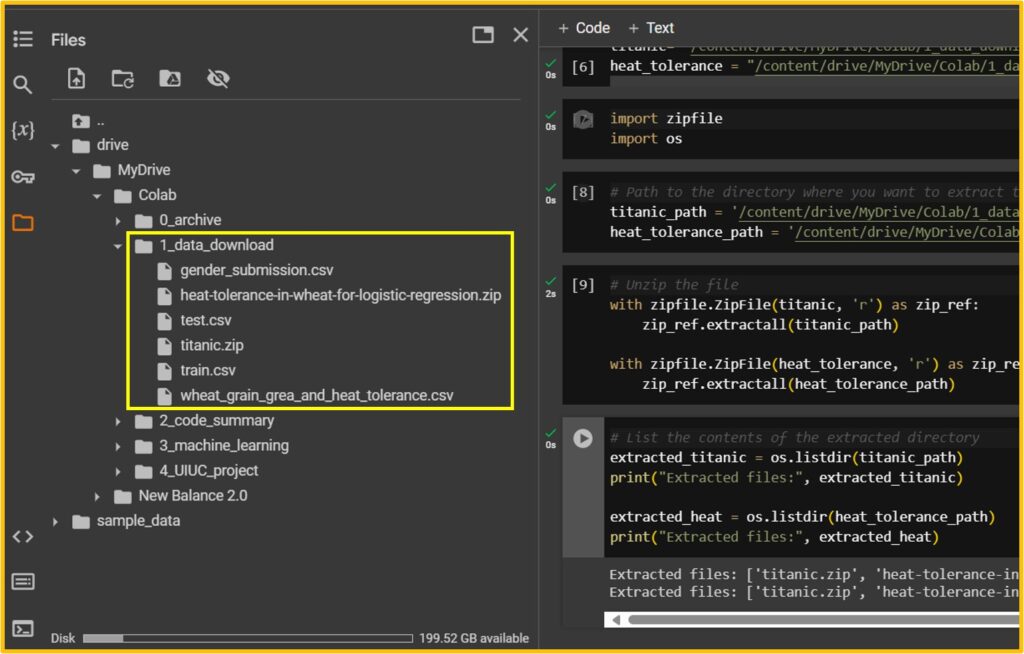

4) Extract the zip file

The data is downloaded as a .zip file, even when the dataset is only a single file, so we need to unzip it.
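Before extracting, you can look inside an archive without unpacking it. The sketch below uses `zipfile.is_zipfile` and `ZipFile.infolist` from Python's standard library to list each member with its uncompressed size; `list_zip_contents` is a hypothetical helper name.

```python
import zipfile


def list_zip_contents(zip_path):
    """Return (name, uncompressed size in bytes) for each member of a zip."""
    if not zipfile.is_zipfile(zip_path):
        raise ValueError(f"{zip_path} is not a zip archive")
    with zipfile.ZipFile(zip_path) as zf:
        return [(info.filename, info.file_size) for info in zf.infolist()]


# Example with the Titanic download from step 3:
# for name, size in list_zip_contents("/content/drive/MyDrive/Colab/1_data_download/titanic.zip"):
#     print(name, size)
```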

First, copy the path of each zip file: in the Colab file browser, right-click the file name and choose Copy path.

```
# heat tolerance data
/content/drive/MyDrive/Colab/1_data_download/heat-tolerance-in-wheat-for-logistic-regression.zip

# Titanic data
/content/drive/MyDrive/Colab/1_data_download/titanic.zip
```

Then I’ll store the paths to the zip files.

```python
# required packages
import zipfile
import os

# paths to the zip files
titanic = "/content/drive/MyDrive/Colab/1_data_download/titanic.zip"
heat_tolerance = "/content/drive/MyDrive/Colab/1_data_download/heat-tolerance-in-wheat-for-logistic-regression.zip"
```

Next, I’ll set up the folder where the data will be extracted.

```python
# Path to the directory where you want to extract the contents
titanic_path = '/content/drive/MyDrive/Colab/1_data_download'
heat_tolerance_path = '/content/drive/MyDrive/Colab/1_data_download'
```

Then, let’s unzip the data.

```python
# Unzip each file into its extraction directory
with zipfile.ZipFile(titanic, 'r') as zip_ref:
    zip_ref.extractall(titanic_path)

with zipfile.ZipFile(heat_tolerance, 'r') as zip_ref:
    zip_ref.extractall(heat_tolerance_path)
```

Finally, let’s list the extracted files.

```python
# List the contents of the extraction directory
extracted_titanic = os.listdir(titanic_path)
print("Extracted files:", extracted_titanic)

extracted_heat = os.listdir(heat_tolerance_path)
print("Extracted files:", extracted_heat)
```

The output confirms that all the data were successfully unzipped into the specified folder.
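In the code above, both archives are extracted into the same folder that holds the zip files, so files from different datasets end up side by side, and `extractall` would overwrite any file that already exists with the same name. One alternative, sketched below, extracts every zip in the download folder into a subfolder named after the archive and returns what was extracted. `extract_all_zips` is a hypothetical helper, not part of the original workflow.

```python
import zipfile
from pathlib import Path


def extract_all_zips(download_dir):
    """Extract each *.zip in download_dir into a same-named subfolder.

    Returns {subfolder name: sorted list of extracted member names}.
    """
    results = {}
    for zip_path in sorted(Path(download_dir).glob("*.zip")):
        target = zip_path.with_suffix("")   # titanic.zip -> titanic/
        with zipfile.ZipFile(zip_path) as zf:
            zf.extractall(target)
            results[target.name] = sorted(zf.namelist())
    return results


# In Colab:
# for folder, files in extract_all_zips("/content/drive/MyDrive/Colab/1_data_download").items():
#     print(folder, files)
```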

Here is the complete code for the Titanic data:

```python
import zipfile
import os

# Mount Google Drive in Google Colab
from google.colab import drive
drive.mount('/content/drive')

# Copy kaggle.json to ~/.kaggle and restrict its permissions
! mkdir -p ~/.kaggle
! cp /content/drive/MyDrive/Colab/0_archive/kaggle.json ~/.kaggle
! chmod 600 ~/.kaggle/kaggle.json

# Download the dataset from Kaggle
!kaggle competitions download -c titanic -p "/content/drive/MyDrive/Colab/1_data_download"

# Path to the zip file
titanic = "/content/drive/MyDrive/Colab/1_data_download/titanic.zip"

# Path to the directory where you want to extract the contents
titanic_path = '/content/drive/MyDrive/Colab/1_data_download'

# Unzip the file
with zipfile.ZipFile(titanic, 'r') as zip_ref:
    zip_ref.extractall(titanic_path)

# List the contents of the extraction directory
extracted_titanic = os.listdir(titanic_path)
print("Extracted files:", extracted_titanic)
```

Full code: https://github.com/agronomy4future/python_code/blob/main/How_to_import_Kaggle_datasets_directly_into_Google_Colab.ipynb